Takashi Someda

Machine vision technologies continue to evolve with the market. Pattern matching-based machine vision algorithms, for example, have made way for artificial intelligence (AI) techniques such as deep learning. Beyond deep learning, however, other types of AI software offer unique benefits for machine vision systems. Sparse modeling, for example, presents a novel approach to data modeling that proves especially useful for image-based detection tasks using small datasets. The following article describes this data modeling approach and compares it with deep learning techniques.

Deep learning vs. sparse modeling

A subset of machine learning, deep learning attempts to build a representation from raw data by extracting inferences through multiple layers (hence "deep"), similar to how the human brain learns or processes information. By default, this process requires large amounts of data. Deep learning methods suit anomaly detection because of their ability to capture complex structures in data. However, deep learning does not suit all machine vision applications.

Often, the conditions required to deploy deep learning effectively, including large volumes of defective sample data and significant infrastructure for analyzing that data, are not available. The black box nature of the deep learning decision-making process, particularly when using unsupervised learning, presents another potential drawback, because the ability to show the multiple processing layers that lead to a result is lost.

Toyota (Aichi, Japan; www.toyota.com) famously established a method called the Five Whys, which offers a way to establish the root cause of a problem by asking multiple layers of why questions. While deep learning offers an effective method for detecting what a defect is, it does not answer why it is a defect. If detecting an anomaly to prevent repetitive occurrences represents the goal, knowing why is just as important as knowing what, and deep learning falls short here.

AI based on sparse modeling, on the other hand, can learn and draw inferences from a small amount of data while remaining explainable in human terms. It avoids the drawbacks described above and still delivers accurate results. Sparse modeling works under the basic principle that most data features are insignificant and that insights can be derived from a relatively small amount of essential information.

Deep learning focuses on analyzing functions, or the correspondence between input x and output y, from large datasets by using a forward problem approach, which observes input x in order to infer output y (or how to predict output y). Conversely, sparse modeling explains complex data in terms of a small number of integrated causes using an inverse problem logic, which observes output y to extract the causality from the observable data, or to infer why input x causes y. Because it uses less data by nature, sparse modeling requires less total energy and compute power to process data than deep learning does.
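To make the inverse problem idea concrete, the sketch below recovers a small number of nonzero causes x from observations y by solving the Lasso problem with iterative soft-thresholding (ISTA). This is one textbook sparse modeling formulation, chosen here purely for illustration; the article does not state which algorithms any particular product uses, and the matrix A, the vector x_true, and the penalty weight lam are made-up values.

```python
# Minimal ISTA (iterative soft-thresholding) sketch for the Lasso problem:
#   minimize 0.5 * ||y - A x||^2 + lam * ||x||_1
# Standard-library Python only. All names and data here are illustrative
# assumptions, not taken from any product or from the article's experiments.


def soft_threshold(v, t):
    """Shrink v toward zero by t; values within [-t, t] become exactly 0."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def matvec(A, x):
    """Multiply matrix A (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def objective(A, y, x, lam):
    """Lasso objective: data misfit plus L1 penalty on the coefficients."""
    residual = [yi - pi for yi, pi in zip(y, matvec(A, x))]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(v) for v in x)


def ista(A, y, lam, iters=500):
    """Estimate sparse coefficients x such that A x approximates y."""
    n_rows, n_features = len(A), len(A[0])
    # Step size 1/L, with L the squared Frobenius norm of A, which is an
    # upper bound on the largest eigenvalue of A^T A (a safe, simple choice).
    L = sum(a * a for row in A for a in row)
    step = 1.0 / L
    x = [0.0] * n_features
    for _ in range(iters):
        residual = [pi - yi for pi, yi in zip(matvec(A, x), y)]
        # Gradient of the least-squares term: A^T (A x - y)
        grad = [sum(A[i][j] * residual[i] for i in range(n_rows))
                for j in range(n_features)]
        # Gradient step followed by shrinkage, which zeroes small coefficients
        x = [soft_threshold(xj - step * gj, lam * step)
             for xj, gj in zip(x, grad)]
    return x


# Hypothetical example: 4 observations, 6 candidate causes, 2 truly active.
A = [
    [1.0, 0.2, 0.0, 0.5, 0.1, 0.3],
    [0.0, 1.0, 0.3, 0.1, 0.4, 0.2],
    [0.4, 0.0, 1.0, 0.2, 0.3, 0.1],
    [0.1, 0.5, 0.2, 1.0, 0.0, 0.4],
]
x_true = [0.0, 2.0, 0.0, 0.0, 0.0, -1.0]
y = matvec(A, x_true)  # observed output generated from the hidden causes

x_hat = ista(A, y, lam=0.1)
print("estimated coefficients:", [round(v, 3) for v in x_hat])
print("objective value:", round(objective(A, y, x_hat, 0.1), 4))
```

The penalty weight lam controls how aggressively coefficients are driven to zero: larger values keep fewer features. Coefficients that come out as exactly 0.0 are the ones the model treats as insignificant, which is what makes the result straightforward to inspect.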

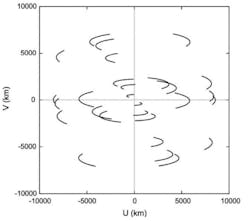

One well-known example of sparse modeling in use came when the international Event Horizon Telescope collaboration captured the first image of a black hole. The team captured partial images of the black hole using very-long-baseline interferometry (VLBI), which can resolve very small features on an astronomical scale (Figure 1). In the graph, U and V represent the physical location of an individual radio telescope on the Earth’s surface. As the Earth spins, the location of each telescope relative to deep space gradually changes. Each trace represents the change in location of a specific telescope. Because more than 10 radio telescopes spread across the Earth performed the black hole imaging, the graph shows more than 10 traces (for more information, see bit.ly/VSD-SPARSE). This data helped produce the first image of the black hole known today.

Another benefit sparse modeling offers is the ability to create portable tools for training and inference on edge devices without the need for graphics processing units (GPUs). In other words, sparse modeling software can run on a central processing unit (CPU) in environments without GPUs, allowing deployment at sites with legacy hardware or integration into existing equipment.

On the other hand, deep learning may require cloud platforms to handle the workloads for training and retraining AI models, forcing users to send sensitive data to external parties. Doing so can raise significant cybersecurity concerns. With sparse modeling, it is possible to perform training at the edge, removing the need to send data to an external location (e.g., a server in the cloud) and resulting in fewer data security concerns. New configurations of the inspection target or image conditions can be set up without an internet connection or data transfer between machines, which protects confidential data.

Visual inspection use cases

HACARUS (Kyoto, Japan; www.hacarus.com) offers sparse modeling software and has developed custom client solutions in disparate fields. SPECTRO, the company’s visual inspection software, targets substrates, precision parts, metals, plastics, and food inspection applications. The software provides easy-to-understand outputs such as heat map visualizations for transparent explainability, along with intersection over union (IoU) and anomaly scores for more efficient evaluations and inspections. SPECTRO CORE takes the inspection algorithm of SPECTRO and allows system integrators and machine manufacturers to incorporate it into factory equipment or edge devices.

Manufacturing defects in photovoltaic solar cells, such as material defects, finger interruptions, microcracks, and cell degradation, can lead to the development of hot spots on solar panels. These hot spots can even cause fires if left unchecked. Cornell University (Ithaca, NY, USA; www.cornell.edu) conducted a study using convolutional neural network and support vector machine techniques to detect such defects. For comparison, HACARUS applied SPECTRO to the same problem to benchmark sparse modeling against these approaches (Figure 2).
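For readers unfamiliar with the intersection over union (IoU) score mentioned among SPECTRO's outputs, it is a standard measure of how well a predicted defect region overlaps a reference region. The sketch below shows the general calculation on two small binary masks; how SPECTRO itself computes its scores is not described here, so the function name, the masks, and the handling of empty masks are illustrative assumptions.

```python
# Generic intersection over union (IoU) for two binary masks of equal size.
# Illustrative only: the masks and the empty-mask convention are assumptions.


def iou(pred_mask, true_mask):
    """Return |pred AND true| / |pred OR true| for two 2D 0/1 masks."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Convention chosen here: two empty masks count as perfect agreement.
    return intersection / union if union else 1.0


# Hypothetical 3 x 3 masks: 1 marks a pixel flagged as defective.
predicted = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]
reference = [
    [0, 0, 1],
    [0, 1, 1],
    [0, 0, 1],
]

# 3 pixels are flagged in both masks and 5 in at least one, so IoU = 3 / 5.
print("IoU:", iou(predicted, reference))
```

An IoU of 1.0 means the predicted and reference regions coincide exactly, while 0.0 means they do not overlap at all.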

The SPECTRO assessment results show promise: the system used 92.5% fewer images while delivering higher accuracy, at 90%. The software was also considerably faster in both training and prediction. Though results will vary from case to case, the comparatively low number of images required to detect anomalies accurately may be attractive in industries where examples of bad data are rare and insufficient to build a model using other techniques.

In another use case, a construction material producer sought a solution to supplement its existing inspection system without changing any processes or machinery. Specifically, the existing inspection system was failing to catch a specific defect in gypsum ceiling tiles (Figure 3). Solving this issue would benefit the company by improving product quality and lowering the costs associated with defects. Using 200 training images of good samples, each 1600 x 1200 pixels, HACARUS applied the SPECTRO inspection AI to 10,000 product samples. Training took approximately two minutes and produced a model with a prediction time of approximately 300 ms per image and an accuracy of 99%. This was done without a GPU, on the company’s local CPU-based hardware.
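As a rough sanity check on what these figures imply for line throughput, the short calculation below works through the arithmetic. It assumes images are processed strictly one at a time, with no batching or parallelism, which the case description does not state; the variable names are illustrative.

```python
# Back-of-the-envelope arithmetic for the ceiling tile case.
# Assumption (not stated in the case): images are processed sequentially.
NUM_SAMPLES = 10_000          # product samples inspected
PREDICTION_MS = 300           # approximate prediction time per image
TRAIN_IMAGES = 200            # good-sample training images
WIDTH, HEIGHT = 1600, 1200    # image resolution in pixels

total_seconds = NUM_SAMPLES * PREDICTION_MS / 1000
total_minutes = total_seconds / 60
images_per_second = 1000 / PREDICTION_MS
pixels_per_image = WIDTH * HEIGHT
training_pixels = TRAIN_IMAGES * pixels_per_image

print(f"inspection time: {total_minutes:.0f} min for {NUM_SAMPLES} samples")
print(f"throughput: {images_per_second:.2f} images per second")
print(f"pixels per image: {pixels_per_image:,}")
print(f"pixels in training set: {training_pixels:,}")
```

Under that assumption, inspecting all 10,000 samples takes about 50 minutes, or a little over three images per second, on CPU-only hardware.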

Conclusion

Deep learning performs well when sufficient data is available, annotations can be prepared, and other constraints carry relatively little weight. However, sparse modeling-based AI, although relatively unknown, produces good, explainable results even from a small amount of data.

This approach can be an alternative to deep learning, or the only viable solution, depending on the availability of data, time, and budget. Being lightweight and able to perform both inference and training on edge devices adds another layer of differentiation that may make sparse modeling-based AI more easily accessible for Industry 4.0 and IoT technologies.

Takashi Someda is Chief Technology Officer at HACARUS (Kyoto, Japan; www.hacarus.com).